Qual-at-Scale Is the Future... or Is It?

Behind the Trendy Method Secretly Paving the Way for Synthetic

I’ve commented many times on the challenges on the consumer side of the research equation. Nearly everyone in the space acknowledges that most research we put in front of consumers is boring. I’ve spent some time running panels, and if there’s one universal truth, it’s that the traditional survey experience is, to put it mildly, a soul-sucking chore. We’ve optimized the life out of it, turning human feedback into a sterile, joyless transaction.

This challenge isn’t new. We have known about it for decades, and people have tried to improve the experience (chatbots, anyone?), but these attempts haven’t delivered on their promise.

Still, I’ve had enough conversations with language models to imagine a world where, instead of ticking boxes, research revolves around genuine conversations: a friendly, engaging chat with an AI moderator that listens, probes, and understands nuance. This no longer feels like science fiction; it’s the promise of “qualitative at scale.” It’s the dream of getting rich human insights through natural conversations steered by AI systems designed to surface the insights clients are chasing. Delivered through a SaaS-like model, without the logistical nightmare of a thousand focus groups, it’s faster, more engaging, and, frankly, a much more human way to do research.

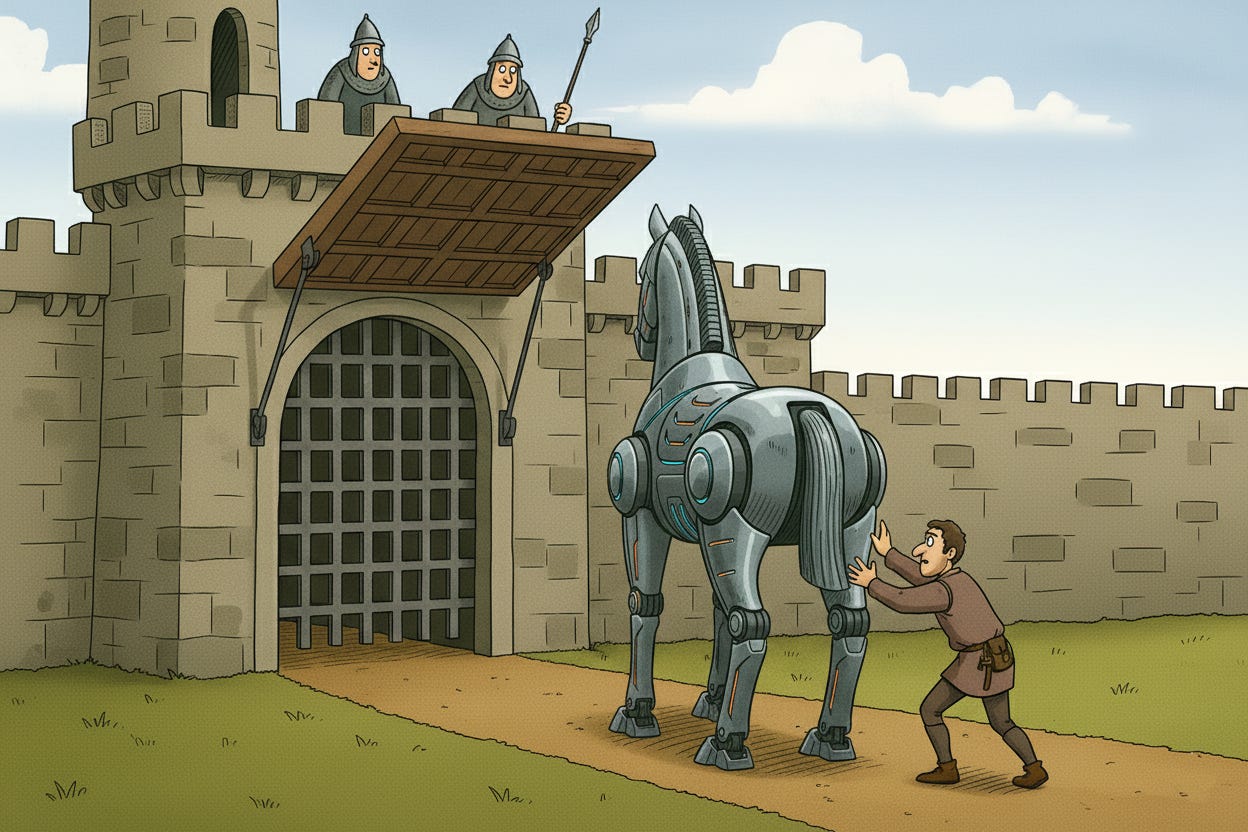

It sounds like the perfect evolution for an industry desperate for a better way. Yet qual-at-scale faces the same uphill battle as every previous attempt at a more engaging consumer experience: the client. What clients require rarely aligns with what makes research fun for consumers, but that’s not stopping the start-ups. As a result, this beautiful, respondent-friendly future is rolling a Trojan horse up to the client’s gates, and what’s inside changes the game in a way nobody’s ready for.

The Dream: Qual-at-Scale Enters the Chat

The idea behind qual-at-scale is simple and brilliant: use AI agents as moderators to conduct thousands of in-depth interviews. Consumers are no longer railroaded into a research experience they find disengaging. The AI moderator works to ensure critical topics are covered, even if conversations wander. The benefits are obvious. Respondents get a better experience, which means higher-quality engagement (aka better data) and less fraud. Researchers get the “why” behind the “what”: the rich, unstructured data that used to come only from small-scale qualitative work.

For an industry that’s been stuck in a rut of five-point scales and a lack of survey design standards, this feels like a revolution. It’s a chance to move beyond the sterile, quantitative box-ticking that has defined market research for decades.

The Sobering Reality: Clients Love Numbers

There’s one tiny problem. Clients depend on numbers.

According to recent data, online qual accounts for less than half (40%) of qualitative research spend. The free food and networking behind the two-way mirror still make for a better experience than a chat session.

But the more important number is 6%: online qual’s share of total client research spend. By contrast, 85% of insights spending is quantitative.[1] Why? Because the data that drives big decisions comes from tracking studies, segmentation, and other large-scale quantitative methods.
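Putting those figures together shows where qual’s slice of the pie comes from. If online qual is 6% of all client spend and 40% of qualitative spend, then qualitative research as a whole is about 15% of spend, which squares with the 85% going to quantitative work:

$$
\text{qual share of total spend} = \frac{\text{online qual share of total spend}}{\text{online qual share of qual spend}} = \frac{6\%}{40\%} = 15\%, \qquad 15\% + 85\% = 100\%
$$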

Clients want charts with trend lines going up and to the right: evidence that they’re succeeding in their jobs. Management wants statistically significant numbers to validate decisions. The CMO wants an OKR that proves they are a good steward of billions of ad dollars. Everyone needs a reliable number, a neat little box to check. Selling them on the beauty of unstructured conversational data is like convincing a Wall Street trader to ditch their Bloomberg terminal for poetry. They might appreciate the art, but they can’t build a financial model with it.

For qual-at-scale to become more than a niche player in the “adjudicate choices” or “tracking choices” phase, it has to solve the client’s problem. It has to give them the numbers they crave.

Bringing AI Inside the Gates

This is where the Trojan horse gets an upgrade. A recent paper making the rounds showed something fascinating: AI models can use open-ended text to accurately predict purchase intent scores on a Likert scale (e.g., 1 to 5). The results were astounding, matching the outcomes of 57 traditional Colgate-Palmolive concept tests.

Think about that. An AI moderator has a chat with a consumer about a new product, and at the end, it predicts how that person would have rated it on a 1-to-5 purchase intent scale. This is the magic bullet. You get the rich, human-centric experience of a qualitative interview, and the client gets the quantitative data they need for their dashboards. Everyone wins, right?

Well, not exactly.

The Unintended Consequence

As I’ve already pointed out, the biggest gap in qual-at-scale is the lack of direct metrics. Any tech that accurately converts a free-form, unstructured conversation into Likert ratings would be a panacea for qualitative research. But there’s one catch. The AI in the aforementioned study did indeed accurately predict Likert ratings from open-ended text, but that open-ended text was itself generated by the LLM.

There’s that nasty synthetic word again.

The study wasn’t about predicting Likert scores from human open-ended text; it was about using LLMs to produce Likert scores synthetically. With a method they called direct Likert rating (DLR), where the LLM gives a score directly, the authors found the LLM was moderately successful (80% correlation). A better method, follow-up Likert rating (FLR), peaked at 90% correlation. The key to FLR? Letting the LLM write a brief textual response about purchase intent from the point of view of a synthetic respondent, then using that text to predict the purchase intent rating (it’s a bit more complicated, so read the paper to see exactly what they did).
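To make the text-to-rating step concrete, here is a toy sketch of turning a short free-text answer into a 1-to-5 purchase intent score. It is not the paper’s method: the paper’s pipeline runs on language models and is more involved. Here, plain bag-of-words cosine similarity against a set of hypothetical anchor phrases (the `ANCHORS` table and every function name are illustrative assumptions) stands in for it. In the study’s setup, the input text would itself be written by an LLM playing a synthetic respondent.

```python
import math
import re
from collections import Counter

# Hypothetical anchor phrases, one per point on a 1-to-5 purchase intent scale.
# These are illustrative assumptions, not anchors taken from the paper.
ANCHORS = {
    1: "no way not interested would never buy",
    2: "unlikely to buy not convinced",
    3: "not sure maybe undecided",
    4: "likely to buy sounds good",
    5: "definitely buy love it must have",
}


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its alphabetic tokens."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors (0.0 if either is empty)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def text_to_likert(response: str) -> tuple[int, dict[int, float]]:
    """Map free text to (most similar rating, normalized similarity over ratings 1-5)."""
    words = bag_of_words(response)
    sims = {rating: cosine(words, bag_of_words(anchor)) for rating, anchor in ANCHORS.items()}
    total = sum(sims.values())
    if total == 0:
        # No overlap with any anchor: fall back to a uniform spread and the midpoint.
        return 3, {rating: 1 / len(ANCHORS) for rating in ANCHORS}
    dist = {rating: s / total for rating, s in sims.items()}
    best = max(sims, key=sims.get)
    return best, dist


if __name__ == "__main__":
    # Stand-in for text an LLM might write as a synthetic respondent (hypothetical).
    rating, dist = text_to_likert("I love it, I would definitely buy it")
    print(rating, {r: round(p, 2) for r, p in dist.items()})
```

Returning the whole spread over 1 to 5, not just the top score, keeps some of the nuance that a single number throws away. A real system would swap the word-overlap scorer for something far more capable, which is exactly where the LLM comes in.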

Here’s the irony that’s been rattling around in my head. If qual-at-scale companies want to compete for the full pie and not just qual’s 15% slice, they’re going to push ahead with using open-ended text to predict Likert ratings. Yet in teaching an AI to understand human conversation so deeply that it can translate messy, emotional, human chatter into a clean 1-to-5 rating, we are also teaching it something else: how to be a human respondent.

Every time we validate that the AI’s predicted score matches an actual human’s score, we’re not improving a tool; we’re building a simulation. We’re creating a model that will, with increasing accuracy, replicate human preferences and opinions without the human.
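For a sense of what that validation step looks like in practice, one common check is the correlation between AI-predicted and human-given ratings across a set of product concepts. The sketch below computes Pearson’s r by hand. The choice of Pearson’s r, the per-concept pairing, and the sample numbers are all assumptions for illustration; the numbers are placeholders, not results from the study.

```python
import math
from statistics import mean


def pearson_r(predicted: list[float], actual: list[float]) -> float:
    """Pearson correlation between AI-predicted and human-given ratings, paired by item."""
    if len(predicted) != len(actual) or len(predicted) < 2:
        raise ValueError("need two equal-length lists with at least two items")
    mp, ma = mean(predicted), mean(actual)
    cov = sum((p - mp) * (a - ma) for p, a in zip(predicted, actual))
    sp = math.sqrt(sum((p - mp) ** 2 for p in predicted))
    sa = math.sqrt(sum((a - ma) ** 2 for a in actual))
    if sp == 0 or sa == 0:
        raise ValueError("correlation is undefined when one list has no variance")
    return cov / (sp * sa)


if __name__ == "__main__":
    # Placeholder numbers for illustration only; NOT data from the paper.
    # Each position is one hypothetical concept: mean predicted vs. mean human rating.
    predicted = [3.1, 3.8, 2.5, 4.2]
    human = [3.0, 3.9, 2.7, 4.0]
    print(f"r = {pearson_r(predicted, human):.2f}")
```

Each time a check like this comes back high, the model has become a slightly better stand-in for the people it was compared against, which is the point of the paragraph above.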

This pours gasoline on the fire of the synthetic data revolution, something I’ve written about in “Why Vibe Insights is the Future.” The race isn’t just about getting better data from humans. It’s a battle between two futures:

Qual-at-Scale: Using AI to have better conversations with real people.

Synthetic Insights: Using AI to generate responses from virtual people.

Both are chasing the same investment dollars. Both promise to solve the scale and cost problems of traditional research. But one is perfecting the human feedback loop, while the other is replacing it.

So, What’s the Play?

This isn’t a simple good-versus-evil story. Qual-at-scale is a necessary and powerful evolution. It offers a path away from the dumpster fire that consumer panels have become, something I’ve lamented in “Consumer Panels are a S**tshow.” Now there’s new hope: a better way to engage with people.

But let’s be pragmatic. Qual has been the smallest portion of the research pie for reasons that have little to do with the actual consumer experience. Clients do see value in qual, but it comes with an asterisk: it’s seen as engaged research that isn’t statistically projectable. Clients have a use for that type of research, but it’s not at the top of the list of methods they deploy.

The business case for pure synthetic data is brutally efficient. It works for everything, and it’s scalable, fast, and cheap. As AI gets better, the temptation for clients to opt for a “good enough” synthetic answer over a more expensive human one will be immense.

I believe the future isn’t one or the other, but a hybrid.

I might seem to be against qual-at-scale, but I’m not. The qual-at-scale market will likely dominate the “Explorers” family of research, which requires genuine creativity, unexpected tangents, and the messy, brilliant spark of human insight. This is where you discover what you don’t know. The enormous challenge standing in the way is retraining the market to turn to a method it has long ignored for big-dollar decision-making. That retraining will take years, if not a decade, so don’t expect overnight success.

Synthetics, on the other hand, will take over the more repetitive, predictable parts of the industry. Think tracking studies, simple ad tests, and anything where the parameters are well-defined.

The smartest companies won’t pick a side. They’ll use high-quality, engaging qual-at-scale to train, validate, and continuously refine their synthetic models. The human conversations become the ground truth, the R&D engine that makes the synthetic data not just plausible, but powerful. This creates a defensible moat that pure-play synthetic companies, running on generic data, will struggle to cross.

The Bottom Line

Qual-at-Scale is one of the most exciting developments in our industry. It promises a future where research is more human, more engaging, and more insightful. Succeeding in this space will be tough enough while clients lean on old habits. And in our rush to solve that problem and build this better future, we might be building a Trojan horse filled not with humans but with the very thing that makes humans obsolete.

The next few years will be a fascinating, chaotic race. Two paths are clear, and they are diverging fast. The question is, are you betting on having better conversations with humans, or are you betting on an AI that’s simply learned to have a better conversation with itself?

[1] https://www.statista.com/statistics/267225/global-market-research-highest-revenue-sources-by-service-type/

It may be that the best qual-at-scale firms end up pivoting to synthetic, since their models do a decent job of providing the inputs for the synthetic bridge I described above.

I've had inbound queries from several investment firms that are looking at the research space and trying to understand whether this is the future. Here's what I've been telling them: I'm bullish on qual-at-scale in the long run but bearish in the short term. On top of it all, there are too many firms competing to win the qual-at-scale race.