An Approachable Deeper Dive into AI Models

A little more nuanced

If you haven’t yet read part one, you can find it at this link.

This document is here to help you wrap your head around the foundational models discussed in the previous article. As promised, it’s all here and written to be relatively easy to digest. Since that’s a lot of information, use the section headings below to help navigate the document.

How AI Works

Modern AI models are built on some powerful ideas that have been in development for decades. One of the most impactful approaches, powering a ton of AI systems, is the neural network. Loosely modeled on the human brain, neural networks are like digital brains made up of connected layers that pass information forward and tweak themselves when they make mistakes. That tweaking process? It’s called backpropagation: the model learns by adjusting its settings to get a little better each time. It’s kind of like a student who corrects the wrong answers on a quiz and ends up knowing the material better next time. Over time, these AI systems get smarter and more accurate. This idea powers most of what you see today, from image recognition to chatbots like ChatGPT. It's the secret sauce behind models that can talk, draw, and even code. Without this technique, modern AI as we know it wouldn't exist.
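To make "tweak themselves when mistakes are made" a little more concrete, here is a minimal sketch of the smallest possible case: a single artificial neuron learning the AND rule (output 1 only when both inputs are 1). The function names and settings (`train_and_gate`, 5,000 passes, a learning rate of 1.0) are illustrative choices, not from any particular library. Real networks repeat this same chain-rule bookkeeping through many layers and millions of settings.

```python
import math

def sigmoid(z):
    """Squash any number into a 0-1 'confidence'."""
    return 1.0 / (1.0 + math.exp(-z))

def train_and_gate(epochs=5000, learning_rate=1.0):
    """Illustrative sketch: one neuron learns AND via gradient descent."""
    data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    w1 = w2 = b = 0.0  # the "settings" the model adjusts
    for _ in range(epochs):
        for (x1, x2), target in data:
            # Forward pass: weighted sum of inputs, squashed to 0-1.
            pred = sigmoid(w1 * x1 + w2 * x2 + b)
            # Backward pass (chain rule). With cross-entropy loss,
            # dLoss/dpred * dpred/dz simplifies to (pred - target).
            grad_z = pred - target
            # dz/dw1 = x1, dz/dw2 = x2, dz/db = 1: nudge each setting
            # in the direction that shrinks the mistake.
            w1 -= learning_rate * grad_z * x1
            w2 -= learning_rate * grad_z * x2
            b -= learning_rate * grad_z
    return w1, w2, b

w1, w2, b = train_and_gate()
for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, round(sigmoid(w1 * x1 + w2 * x2 + b), 3))
```

After training, the neuron's confidence should sit near 1 for (1, 1) and near 0 for everything else: the "student" has fixed its wrong answers.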

Then there’s the stats nerd in the family: probabilistic models. These models aren’t just guessing; they’re weighing possibilities. It’s like when you think, “If I get a D in history, there’s an 80% chance my parents will be mad.” These models help AI understand relationships and uncertainty, which is super useful in fields like science, finance, and medicine. They’re also used in causal reasoning, helping AI figure out why something happened, not just what happened. And then we’ve got reinforcement learning, which is basically AI learning from experience. This is easy for most people to understand since it’s the computer’s version of training a dog with treats. Imagine a robot playing a video game: it gets points for doing well and learns to avoid bad moves. That reward system helps the robot get better and better over time.
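Probabilistic models also run the report-card logic in reverse: if your parents are mad, how likely is it that you got a D? That's Bayes' rule. The sketch below assumes made-up numbers (10% of report cards have a D, parents are mad 80% of the time when there's a D and 20% of the time otherwise); the function name `bayes_update` is illustrative.

```python
def bayes_update(prior, likelihood, false_alarm_rate):
    """Return P(cause | evidence) using Bayes' rule.

    prior:            P(cause), belief before seeing the evidence
    likelihood:       P(evidence | cause)
    false_alarm_rate: P(evidence | no cause)
    """
    # Total chance of seeing the evidence, from either explanation.
    evidence = likelihood * prior + false_alarm_rate * (1 - prior)
    return likelihood * prior / evidence

# Hypothetical numbers, for illustration only.
p_d_given_mad = bayes_update(prior=0.10, likelihood=0.80, false_alarm_rate=0.20)
print(round(p_d_given_mad, 3))  # 0.308
```

Even with an 80% link between a D and angry parents, angry parents only point to a D about 31% of the time, because most mad-parent days have some other cause. Weighing possibilities like this is exactly what these models do.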

What’s cool is that today’s best AI systems don’t rely on just one of these ideas; they bring multiple techniques to the table. Some combine neural networks with probabilities, others mix prediction with memory, vision, or decision-making. It’s like giving a robot not just a brain, but instincts, reasoning skills, a good sense of outcomes, and the ability to learn from mistakes. For example, a model like ChatGPT uses neural networks, a dash of information theory, and loads of data to sound human. Others use attention mechanisms and training tricks inspired by how we compress and transmit information efficiently. That combination of tools and techniques is what makes modern AI models so capable and so good at solving real-world problems in everything from medicine to music.

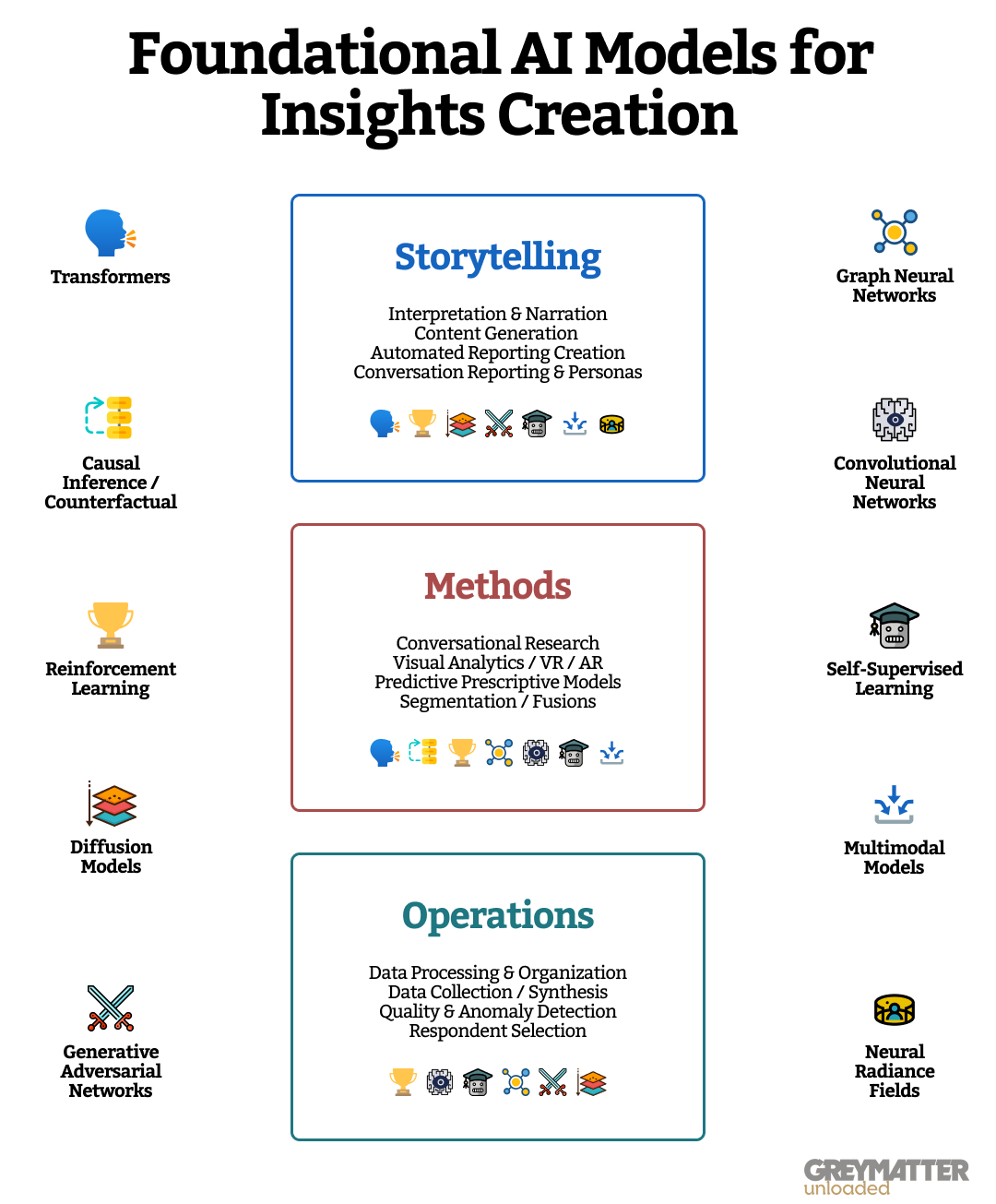

So while I’ve highlighted below what I believe are the 10 most impactful models of the day, know that these approaches have grown from well-established roots and advances in a few core fields. As for what’s next in the space, I’ve speculated on that near the bottom of this article.

The Models in a Little More Detail

Transformers (Not Just Talkers)

These are the models we're usually referring to when we talk about AI today. Why? Because they're everywhere. They're even hiding in the acronym GPT: Generative Pre-trained Transformer. Some of them are big and proprietary (ChatGPT) and some have openly available weights (LLaMA). Either way, the AI innovation behind the scenes is a Transformer model. A lot of innovation happens here because the benefit is easy to see: an AI system that is fluent in the languages that matter (e.g. English, French, JavaScript) and has an incredible grasp of the world's corpus of knowledge.

Best examples: ChatGPT, Claude, Gemini, Mistral, LLaMA

What they do: These models understand and generate sequences. Their superpower is predicting what comes next in a sequence, especially in language, which makes them pattern-recognition pros in speech, writing, music, and code. We do this ourselves with a little less math: "Peter Piper picked a ____" is an easy sentence to finish for anyone brought up speaking English. A transformer makes the same kind of prediction about how words go together to make language work.
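To see the "guess the next word" idea in its most stripped-down form, here is a sketch of a bigram model: it simply counts which word tends to follow which. To be clear, this is not a transformer. A real transformer uses attention over the whole preceding context and billions of learned parameters, but the goal (predict the next piece of the sequence) is the same. The function names are illustrative.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which: a crude stand-in for 'learning' language."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    options = follows.get(word.lower())
    if not options:
        return None
    return options.most_common(1)[0][0]

corpus = ("peter piper picked a peck of pickled peppers "
          "a peck of pickled peppers peter piper picked")
model = train_bigrams(corpus)
print(predict_next(model, "picked"))  # a
```

Notice the model never "knows" anything; it has only seen which words show up together. Scale that idea up enormously and add much richer context, and you get a sense of why transformers are such fluent pattern matchers.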

Where they struggle: They're bad at tasks that aren't about prediction. Think math. The answer to 948 + 276 doesn't call for a prediction; it's a straightforward calculation. When humans solve math problems, we follow a series of steps to get to the answer. Transformer models don't naturally work step by step; they generate their answer one piece at a time based on what's most likely to come next, which is why they make more incorrect guesses as the math gets more complicated. This is also why you'll hear stories of large language models "hallucinating" answers that are patently untrue. Because they're making predictions, some of those predictions are bound to be wrong.
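For contrast, here is what "following a series of steps" looks like: the column addition we all learned on paper, written as a short procedure. There's no guessing involved: every column is computed exactly and the carry is passed along. The function name `column_add` is just an illustrative choice.

```python
def column_add(a: str, b: str) -> str:
    """Add two non-negative whole numbers written as digit strings, the paper way:
    right to left, one column at a time, carrying when a column goes past 9."""
    # Pad the shorter number with leading zeros so the columns line up.
    a, b = a.zfill(len(b)), b.zfill(len(a))
    digits, carry = [], 0
    for da, db in zip(reversed(a), reversed(b)):
        total = int(da) + int(db) + carry
        digits.append(str(total % 10))  # write down the ones digit
        carry = total // 10             # carry the rest to the next column
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))

print(column_add("948", "276"))  # 1224
```

A procedure like this is right every time, no matter how long the numbers get. A model that predicts its answer can only be as good as the patterns it has seen.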

The one sentence use case: You need a super knowledgeable assistant that can converse with a user to deliver information in a conversational manner.

How to use for insights? This has been explored by a significant number of companies and start-ups, both in the insights space and in adjacent spaces. To make this work well, you will want to pair a large language model with your data, for example via the Model Context Protocol (MCP) or retrieval-augmented generation (RAG). Examples of this applied to insights include:

Chat with data: Most clients hate crosstabs. This allows customers to ask an LLM questions about your data without the hassle of learning to navigate a confusing crosstabbing tool.

AI personas: Tell the AI system to pretend it is a particular type of person in your data. Customers can then chat with the AI as if they are that person. The AI uses your data to answer.

Enhanced search: Take advantage of the predictive power of the transformer to better predict what the user is trying to ask.

Reasoned insights: Not only can transformer models return data based on a user's question, they can also reason about the meaning behind that data. Put this together with automation tools to generate reports and findings without needing an analyst.

Qualitative: Chatbots in qual research have been around for a long time, but as you can imagine, a guided research conversation with an LLM is a much more scalable and engaging experience for respondents.

Revised survey experience: No one has cracked the actual survey experience yet but many are working to turn traditional quant surveys into better experiences with LLMs.

Copy generation: Use LLMs trained on consumer preference data to create advertising copy or scripts that highlight nuances in consumer preferences.
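Most of the use cases above depend on pairing the LLM with your data. With RAG, the system first retrieves the pieces of your data most relevant to a question and then hands them to the LLM alongside the question. Below is a minimal sketch of just the retrieval and prompt-building half, under loud assumptions: it scores relevance with simple word overlap (bag-of-words cosine similarity) where real systems typically use learned embeddings, it stops before calling any LLM, and the survey "findings" are made up for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text):
    """Lowercase and split into simple word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Word-overlap similarity between two bags of words (0 = nothing shared)."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, documents, k=2):
    """Return the k documents that best match the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=2):
    """Ground the LLM: paste the retrieved findings into the prompt."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return ("Answer using only the survey findings below.\n"
            f"Findings:\n{context}\n\nQuestion: {question}")

# Hypothetical findings, for illustration only.
findings = [
    "Brand awareness among 18-24 year olds rose 6 points in Q2.",
    "Purchase intent for the new flavor is highest in the Midwest.",
    "Respondents cite price as the top reason for switching brands.",
]
print(build_prompt("Why are customers switching brands?", findings, k=1))
```

The design choice that matters is grounding: the LLM is told to answer from your retrieved data rather than from whatever it happens to predict, which helps reduce (but doesn't eliminate) hallucinated answers.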

Accessibility:

Model Maturity: Fairly Mature

Talent Pool: Abundant

Implementation Complexity: Somewhat Difficult

Want to learn more? Watch the first 2 chapters of this video for a great visual representation.

Causal Inference / Counterfactual AI

Many of the models on this list are designed to look at relationships and make predictions. Causal inference and counterfactual AI are advanced methodologies in AI and statistics focused on understanding cause-and-effect relationships rather than mere correlations. More so than the others, this technique is focused on enabling robust decision-making and predictions. Causal inference identifies how changes in one variable (e.g., saw an ad) directly affect another (e.g., made a purchase), while counterfactual AI explores “what-if” scenarios by estimating outcomes under hypothetical conditions that didn’t occur. These approaches are critical in fields like healthcare, economics, policy analysis, and AI-driven decision systems, where understanding true causes is essential for effective operations.
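Here is a small worked example of why correlation can mislead and how a causal adjustment fixes it. Assume (hypothetically) that loyal customers both see more ads and buy more. A naive comparison of "saw the ad" vs. "didn't" mixes the ad's effect with loyalty's effect. The back-door adjustment below compares within each loyalty group and then weights by group size, which estimates the "what if everyone saw the ad vs. no one did" gap. All counts are made up, and this only works because the confounder (loyalty) is observed.

```python
# Hypothetical counts: (loyal_customer, saw_ad) -> (customers, purchases).
# Loyal customers see more ads AND buy more, so loyalty confounds the ad effect.
data = {
    (True, True): (400, 240),
    (True, False): (100, 50),
    (False, True): (100, 20),
    (False, False): (400, 40),
}

def naive_effect(data):
    """Purchase rate of exposed minus unexposed, ignoring loyalty (pure correlation)."""
    def rate(saw_ad):
        n = sum(count for (_, ad), (count, _) in data.items() if ad == saw_ad)
        buys = sum(bought for (_, ad), (_, bought) in data.items() if ad == saw_ad)
        return buys / n
    return rate(True) - rate(False)

def adjusted_effect(data):
    """Back-door adjustment: compare within each loyalty group, weight by group size.
    Estimates P(buy | do(ad)) - P(buy | do(no ad))."""
    total = sum(count for count, _ in data.values())
    effect = 0.0
    for loyal in (True, False):
        n1, b1 = data[(loyal, True)]
        n0, b0 = data[(loyal, False)]
        group_share = (n1 + n0) / total
        effect += group_share * (b1 / n1 - b0 / n0)
    return effect

print(round(naive_effect(data), 2))     # 0.34
print(round(adjusted_effect(data), 2))  # 0.1
```

The naive view says the ad lifts purchase rates by 34 points; once loyalty is accounted for, the ad's causal lift is closer to 10 points. That gap is the difference between "what happened alongside the ad" and "what the ad caused."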

What they do: Figure out not just what happened, but what would have happened if something else occurred.

Where they struggle: Causal inference and counterfactual AI struggle with unobserved confounders (hidden factors that influence both the cause and the effect) and data limitations, which can bias results and make it hard to isolate true causal effects without strong assumptions. And just like us mere humans, these techniques are susceptible to black swan events that haven't been seen before. If a meteor strikes Manhattan tomorrow and no one saw it coming, neither will the AI model.

The one sentence use case: You have data about the real world (customers, events, etc.) and you want to be able to understand what drives specific outcomes and plan for a specific result.

How to use for insights? Probably the most interesting models on the list for insights professionals. These models are designed to make insights diagnostic and prescriptive. Causal and counterfactual models are pivotal for generating truly strategic insights. They push the insights field towards understanding the "why" behind observed phenomena and predicting the consequences of actions. While challenging to implement correctly, the ability to answer questions and reason about "what ifs" provides a powerful foundation for data-driven decision-making.

Marketing effectiveness: The holy grail of advertising spend. The means through which all ad spend is justified. Use these models to not only understand what’s working in a campaign but scenario plan for the next campaign with historical data.

Product analytics: Figure out what product features are driving what impact. Did the new packaging drive purchase or was that a coupon event?

Consumer targeting: Scenario plan which intervention will cause a consumer to convert in the purchase funnel. Will it be ad targeting, a discount, high frequency, or personalized creative?

Root cause analysis: Why did website traffic dip? Was it the new design, the ad creative, or the turnaround on shipping time?

Media planning: Scenario plan various media plans designed around curated outcomes.

Accessibility:

Model Maturity: Relatively New

Talent Pool: Scarce

Implementation Complexity: Somewhat Difficult

Want to learn more? This video provides a high level overview of causal inference as a concept, and this video shows how ML is brought to the table. Counterfactual modeling is still evolving, so there isn't a ton of approachable content yet. This video is probably the best place to start.

Convolutional Neural Networks (CNNs)

When you hear CNN in a conversation with a data scientist, they're not talking about cable news; they're referring to one of the most interesting foundational AI techniques changing the world. Unlike LLMs, this technique's role in your daily life isn't immediately apparent, but CNNs have been around for quite some time. CNNs are a big part of why cell phone photography can rival high-end cameras. The magic eraser that removes an unwanted person from a cell phone photo? That’s a CNN at work. CNNs break a photo up into small parts, analyze each part separately, work out what each part is, and understand how it connects to the larger whole. Cars that can drive themselves and see objects in the road? That’s a CNN. These models are all around us, helping computers see the world much the way we do.
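The "break a photo into parts" step is a convolution: a small grid of numbers (a kernel) slides across the image and scores how well each patch matches a pattern. Below is a minimal sketch with a hand-picked vertical-edge kernel on a tiny made-up image. In a real CNN the kernels are learned during training and stacked many layers deep; here the values are chosen by hand for illustration.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.
    Like most deep-learning libraries, this computes cross-correlation (no kernel flip),
    followed by a ReLU that keeps only positive matches."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # How strongly does this patch match the kernel's pattern?
            total = sum(image[i + di][j + dj] * kernel[di][dj]
                        for di in range(kh) for dj in range(kw))
            row.append(max(0, total))  # ReLU: ignore negative matches
        feature_map.append(row)
    return feature_map

# A tiny hypothetical image: dark on the left (0), bright on the right (1).
edge_image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Responds where brightness increases from left to right.
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
for row in conv2d(edge_image, vertical_edge_kernel):
    print(row)
```

The output lights up (value 3) where the patch straddles the dark-to-bright boundary and drops to 0 over the flat bright area. Early CNN layers find simple features like this; later layers combine them into eyes, wheels, or logos.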

Best examples: Google Photos Magic Eraser, Tesla Autopilot, Apple's Face ID

What they do: Spot patterns in images. They’re the original engine of the computer vision revolution.

Where they struggle: CNNs are picky eaters when it comes to image data. They do great with clear, consistent visuals (like cats vs. dogs) but get confused when images are noisy, altered, or taken from unusual angles. Even tiny, carefully chosen changes to a handful of pixels can throw them off completely. They also need deep, rich datasets to perform well, and any gaps or biases in that data can get them into trouble.

The one sentence use case: You need a computer to be able to see things in images or the physical world and make decisions based on what it sees.

How to use for insights? This class of models is especially good at understanding visual stimuli. Most computer vision applications have this or similar tech inside. Within the insights space, this can turn traditional visuals into something analyzable:

Ad testing: Use a CNN to look through an ad and isolate the various elements of the advertising. This allows you to turn that ad into data that can be run through models to predict effectiveness.

Social media monitoring: Want to know if a product is showing up in social media posts or videos? A CNN can look through those videos and images for things that brands find interesting (logos, product packages). Not only that, the CNN can help spot trends or provide context so the brand knows what they’re being associated with.

Product review monitoring: Are people posting negative reviews showing products broken or dysfunctional? CNNs can spot the brand, help understand the context and map the issues (both bad and good).

Facial analysis: Determine user emotion in response to stimulus, identify if someone’s looking at an ad/TV/etc. Analyze human response in focus groups.

Pattern analysis: Monitor shopping behavior from video feeds. Where on the shelf do people look, how do they navigate the store, and which products do they pick up and investigate, add to the cart immediately, or ignore?

Receipt processing: Help decipher receipt data for analysis. Determine purchase location, items, prices, etc.

Accessibility:

Model Maturity: Fairly Mature

Talent Pool: Abundant

Implementation Complexity: Easiest

Want to learn more? Best high level overview of how this works is from IBM and can be seen here. To learn more about how it works under the covers there’s a great set of examples from Adam Harley from Carnegie Mellon.

Generative Adversarial Networks (GANs)

GANs have also been around for some time now. They powered much of the first wave of AI image generation, although today's best-known text-to-image tools, such as OpenAI's DALL·E models, are built on diffusion instead (more on that in the next section). GANs are also used in those fancy Snapchat filters that make you look older, younger, or like a cat. These models have certainly found a niche in AI-generated art. But human art isn't the only thing they're good at copying; they're also good at copying speech patterns and are often deployed in text-to-speech applications.

Best examples: StyleGAN face generation (thispersondoesnotexist.com), ElevenLabs text to speech.

What they do well: Quite simply, a GAN is a contest between two competing models. One model (the generator) tries to fake something, like an image; the other (the discriminator) tries to spot the fake. They go back and forth and improve by competing. This is great for copying styles from a known set of training data (e.g. human faces).
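The tug-of-war can be written down as two opposing scores. This sketch computes only the standard losses from the original GAN formulation (binary cross-entropy for the discriminator, plus the commonly used "non-saturating" loss for the generator); the networks themselves and the training loop are left out. The inputs are the discriminator's probabilities that each sample is real, and the example values are made up.

```python
import math

EPS = 1e-12  # guard against log(0)

def discriminator_loss(d_real, d_fake):
    """'Spot the fake' loss. d_real / d_fake: the discriminator's probability that
    each real / generated sample is real. Low loss = it tells them apart confidently."""
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """'Fool the judge' loss (non-saturating form): low when fakes are judged real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# A confused judge (50/50 on everything) vs. a judge that easily spots fakes.
print(round(discriminator_loss([0.5], [0.5]), 3), round(generator_loss([0.5]), 3))
print(round(discriminator_loss([0.99], [0.01]), 3), round(generator_loss([0.01]), 3))
```

When the discriminator wins easily, its loss is tiny and the generator's loss is large, so the generator gets a strong push to improve. If one side gets too far ahead for too long, that balance breaks, which is exactly the training headache described next.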

Where they struggle: GANs are notoriously tricky to train. It’s like playing tug-of-war, if one side gets too strong, the balance breaks. They can also struggle with generating structured, logical outputs (like a coherent spreadsheet), and often mess up fine details (think distorted hands or text).

The one sentence use case: You need an approach that allows you to make a facsimile of something that is as good as the original it learned from.

How to use for insights? Think of GANs as dueling models that get better over time at outsmarting each other. In the insights field GANs have a robust use case in supporting multiple parts of the industry. Here’s just a sample of how they can be employed:

Fraud detection: Train a GAN on "normal" data. New data points that the discriminator flags as "fake," or that the generator struggles to reconstruct accurately, are likely anomalies worth inspecting further.

Synthetic samples: Train the model on existing (limited) data to generate more synthetic samples that follow the same underlying distribution. This will help boost sparse areas of your sample.

Open data: Make more underlying research data open to customers and marketers by using a GAN to create samples that mimic the original data and prevent private consumer data from being shared.

Anomaly detection: Similar to fraud detection, use a GAN to spot when data differs from expected results to highlight subtle unseen changes that could indicate new trends, etc.

Marketing mix/financial modeling: Use a GAN trained on market data to predict the likely outcome of a hypothetical media allocation in the market. Extend the use case to data trained on company performance to predict financial outcomes.

Product innovation prototyping: Generate synthetic product designs for review and further research.

Accessibility:

Model Maturity: Maturing

Talent Pool: Moderate

Implementation Complexity: Somewhat difficult

Want to learn more? IBM to the rescue again for an overview of GANs.

Diffusion Models

It's fair to say that when it comes to AI-generated content (music, images, etc.), GANs are no longer the cool kid in town; now it's all about diffusion. Diffusion models learn from a huge training dataset, but not by stitching pieces of it together. Imagine taking a puppy photo and adding a little static, over and over, until nothing but noise is left. A diffusion model is trained to undo that process one small step at a time. After practicing on thousands of puppy photos, it can start from pure static and gradually clean it up into a brand new puppy picture that isn't a copy of any photo in the pile. They're great when you have a lot of training data and want to create new artificial versions based on it. Diffusion models accomplish much the same thing as GANs and can be a little slower, but they are easier to train and tend to produce more varied outputs.

Best examples: Snapchat/Instagram filters, music generation, Stable Diffusion

What they do well: Start with noise and reverse it step by step to generate stunning outputs. Compared with GANs, diffusion models tend to be more creative, producing content that is an extension of the training data, not just a facsimile of it.
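Here is a sketch of the "add static over and over" half (the forward process) for a single pixel value. It uses the linear noise schedule popularized by the DDPM paper (1,000 steps, noise growing from 0.0001 to 0.02 per step) and the standard shortcut for jumping straight to any step. The other half, a trained neural network that predicts and removes the noise one step at a time, is the expensive part and is not shown here; function names are illustrative.

```python
import math

def linear_beta_schedule(steps=1000, beta_start=1e-4, beta_end=0.02):
    """How much fresh noise is mixed in at each step (a linear ramp)."""
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]

def cumulative_alphas(betas):
    """alpha_bar[t]: the share of the original signal's variance still left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, noise):
    """Jump straight to step t of the forward (noising) process:
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return math.sqrt(a) * x0 + math.sqrt(1.0 - a) * noise

alpha_bars = cumulative_alphas(linear_beta_schedule())
for t in (0, 250, 500, 999):
    # Weight on the original pixel shrinks toward 0; weight on the noise grows toward 1.
    print(t, round(math.sqrt(alpha_bars[t]), 3), round(math.sqrt(1 - alpha_bars[t]), 3))
```

By the last step almost nothing of the original remains, which is why generation can start from pure static: the trained model just runs this process backwards.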

Where they struggle: Diffusion models can be slow and resource-intensive, often taking seconds or minutes to generate outputs and requiring powerful computers. They also struggle with complex prompts, producing artifacts like blurry spots or incorrect details, especially when instructions are vague or involve rare combinations.

The one sentence use case: Same as GANs: you need an approach that allows you to make a creative extension of something that is as good as the original it learned from.

How to use for insights? It would be fair to say that most of the use cases for GANs also work well for diffusion models, i.e. rinse and repeat. In the AI community diffusion is the more popular approach while GANs are waning in popularity; however, GANs are usually better with insights-style data (for now). Here are some insights use cases:

Synthetic data generation: The training process tends to be more stable than GANs, potentially leading to better data diversity.

Mock-up creation: Create hyper realistic product mock-ups for further research.

Advertising generation: Use feedback from consumer data to create sample advertisements, both images and video.

Consumer avatar creation: Use to create visual representations of the consumer grounded in insights data which help marketers better empathize with the customer.

What if analysis: Use a model trained on typical consumer data and have the model generate a likely outcome based on specific changes in the underlying dataset.

Advanced imputation: Diffusion models are especially good at filling in missing details. Diffusion can be used as a more sophisticated form of data imputation to fill in missing details in aggregate datasets.

Accessibility:

Model Maturity: Maturing

Talent Pool: Moderate

Implementation Complexity: Very difficult

Want to learn more? This is a relatively approachable video on Diffusion.

Reinforcement Learning (RL)

The idea of reinforcement learning goes back decades. It is also the most relatable, since it's the technique we use to learn how to navigate the world as babies and children: try something over and over until you get it right, guided by positive and negative feedback. Reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment to achieve a specific goal. It's about learning through trial and error, guided by rewards or penalties. The agent observes the environment, takes actions, and receives feedback in the form of a reward signal, which it uses to improve its decision-making over time. Because these agents are software, they can practice a virtual task in a simulated environment a practically unlimited number of times, very quickly.

Best examples: Recommendation engines, AI controlled NPCs in video games, smart thermostats

What they do: Learn by trial and error. If the AI does something right, it gets a reward and learns to do it more.
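Here's trial and error in code: tabular Q-learning, one of the classic RL algorithms, on a made-up five-cell corridor. The agent starts in cell 0 and earns a reward only when it reaches the last cell. It keeps a table of how good each action looks in each cell and updates it after every move. The environment, the settings (learning rate 0.5, discount 0.9, 20% exploration), and the function name are all illustrative assumptions.

```python
import random

def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor: start at cell 0, reward 1 at the last cell."""
    rng = random.Random(seed)
    actions = (-1, +1)  # step left, step right
    q = {(s, a): 0.0 for s in range(n_states) for a in actions}
    goal = n_states - 1
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the length of each attempt
            # Explore sometimes (or when it has no preference yet); otherwise
            # exploit the action that currently looks best.
            if rng.random() < epsilon or q[(state, -1)] == q[(state, +1)]:
                action = rng.choice(actions)
            else:
                action = max(actions, key=lambda a: q[(state, a)])
            next_state = min(max(state + action, 0), goal)
            reward = 1.0 if next_state == goal else 0.0
            # Learn: move the estimate toward reward + discounted best future value.
            future = 0.0 if next_state == goal else max(q[(next_state, a)] for a in actions)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
            if state == goal:
                break
    # The learned habit: which way to step from each non-goal cell.
    return ["right" if q[(s, +1)] > q[(s, -1)] else "left" for s in range(goal)]

print(q_learning_corridor())
```

Early attempts are random wandering. Once the agent stumbles onto the reward, that credit flows backwards through the table one cell at a time until "step right" wins everywhere. That's the puppy-and-treat loop in miniature.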

Where they struggle: Reinforcement learning struggles with sample inefficiency, often requiring millions of interactions to learn, which can be slow and impractical in real-world settings. It also faces challenges with reward design, as poorly crafted rewards can lead to unintended behaviors, and training can be unstable in complex environments. Computers don't yet learn this way nearly as efficiently as babies and puppies do.

The one sentence use case: You know the outcomes you're looking for and want a computer to be able to learn how to generate those outcomes.

How to use for insights? This approach has a lot of applicability to the insights space. There’s very little of the insights data creation and interpretation funnel that can’t benefit from Reinforcement Learning.

Respondent selection: Use this model to pair research tasks with the respondents most likely to be qualified to complete them (e.g. take a survey).

Personalization: Create a better experience for panelists or respondents by personalizing the experience of participating in research to their needs.

Dynamic testing: Use these models to run sophisticated experiments that continuously alter the experiment parameters until the best outcome is determined. Think dynamic price testing where prices and packages are changed dynamically until the model learns what works best. Can be applied to other use cases like ad testing. Can be implemented as a continuous testing environment… imagine always on content A/B testing.

Agent based optimization: Implement a reinforcement learning agent that can help make dynamic suggestions for how to allocate media dollars to capitalize on the current trends. Think of it as a mix modeler in your pocket monitoring trends and spend.

Adaptive surveys: A survey that monitors respondent engagement and learns to ask the right questions to maintain high levels of attention.

Insights automation: Use to generate insights outputs for commonly generated reports. Use humans to “train” the model by providing positive feedback when the insights are good and negative feedback when the output is bad.
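The dynamic testing idea above is often tackled with a multi-armed bandit, a simplified relative of full reinforcement learning where each decision stands alone (no "state" to navigate). Below is a minimal epsilon-greedy sketch of an always-on A/B/n test: most of the time it shows the variant with the best observed conversion rate, and 10% of the time it explores at random. The true conversion rates are hypothetical and hidden from the algorithm; the simulated customers stand in for real traffic.

```python
import random

def epsilon_greedy_test(conversion_rates, rounds=5000, epsilon=0.1, seed=42):
    """Always-on A/B/n test run as an epsilon-greedy multi-armed bandit.
    conversion_rates: hypothetical true rate for each variant (unknown to the agent)."""
    rng = random.Random(seed)
    k = len(conversion_rates)
    shows = [0] * k  # times each variant was shown
    wins = [0] * k   # conversions observed for each variant
    for _ in range(rounds):
        if rng.random() < epsilon or 0 in shows:
            arm = rng.randrange(k)  # explore: try a random variant
        else:
            # Exploit: show the variant with the best observed conversion rate.
            arm = max(range(k), key=lambda i: wins[i] / shows[i])
        shows[arm] += 1
        wins[arm] += int(rng.random() < conversion_rates[arm])  # simulated customer
    return shows, wins

shows, wins = epsilon_greedy_test([0.02, 0.05, 0.30])
for i, (n, w) in enumerate(zip(shows, wins)):
    print(f"variant {i}: shown {n} times, converted {w}")
```

Unlike a classic A/B test that splits traffic evenly until the end, the bandit shifts traffic toward the winner as evidence builds, while the small exploration budget keeps checking whether the losers have improved.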

Accessibility:

Model Maturity: Maturing

Talent Pool: Moderate

Implementation Complexity: Somewhat difficult

Want to learn more? Reinforcement Learning is described well in this video.

Self-Supervised Learning (SSL)

This technique is an evolution of supervised learning. Supervised learning happens when you give a computer a problem with clearly labeled data and an example of how that data can be used to solve the problem. This is the same way we learn math in school. We use well "labeled" inputs (e.g. cost of apples=$2, apples sold per hour=4) and teach the student how to use those inputs to solve a problem (e.g. money earned in an hour).

Self-supervised learning (SSL) is a type of machine learning where a model learns to make predictions or understand data without explicit labeled outputs, unlike supervised learning, which relies on input-output pairs (examples). Instead, SSL creates its own “labels” from the data itself by designing pretext tasks: artificial problems the model solves to learn useful representations. These learned representations can then be used for downstream tasks, like classification or generation. SSL is the next step in the evolution of learning (imagine learning math without first being told that the price of apples is important), offering a powerful way to leverage vast amounts of unlabeled data, which is abundant in the real world.
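A common pretext task is fill-in-the-blank: hide a word and ask the model to guess it. The hidden word is the label, so the data labels itself. The sketch below shows that idea at toy scale: one function turns a raw sentence into (masked sentence, answer) pairs, and a deliberately simple counting "model" learns to fill a blank from its left and right neighbors. Real SSL systems train large neural networks on pretext tasks like this; the sentences and function names here are made up for illustration.

```python
from collections import Counter, defaultdict

def make_masked_examples(sentence, mask="[MASK]"):
    """Pretext task: hide each word in turn; the hidden word becomes the 'label'.
    No human labeling required: the data supplies its own answers."""
    words = sentence.split()
    return [(" ".join(words[:i] + [mask] + words[i + 1:]), word)
            for i, word in enumerate(words)]

def train_fill_in(sentences):
    """Toy 'model': count which word appears between a given left and right neighbor."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]  # sentence start/end markers
        for i in range(1, len(words) - 1):
            counts[(words[i - 1], words[i + 1])][words[i]] += 1
    return counts

def fill_in(counts, left, right):
    """Best guess for the blank between `left` and `right`, or None if never seen."""
    options = counts.get((left, right))
    return options.most_common(1)[0][0] if options else None

# Unlabeled, hypothetical verbatims.
verbatims = [
    "customers love the new flavor",
    "customers love the old flavor",
    "shoppers love the new packaging",
]
print(make_masked_examples("brand awareness grew"))
model = train_fill_in(verbatims)
print(fill_in(model, "customers", "the"))  # love
```

Nobody told the model that "love" is a sentiment word or that "the" is an article; it picked up those patterns purely from the raw text, which is the core appeal of SSL for messy insights data.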

Best known examples: Siri responding to your specific voice or accent when you say "Hey Siri", TikTok being able to design a For You page based on your preferences for certain types of videos.

What they do: Learn from data by making up puzzles. The more puzzles they make up the more they understand the data and the more they can use the data. It’s how many modern models get smart.

Where they struggle: Self-supervised learning struggles with high computational costs, requiring powerful hardware and significant energy to pre-train on large datasets. Until you do the pre-training the models don't work very well. It also depends on carefully designed pretext tasks, as poorly chosen tasks can lead to weak or irrelevant representations that don’t transfer well to practical applications.

The one sentence use case: This is the perfect technique for legacy businesses that didn't invest in structured data warehouses. If you have a lot of data that's not necessarily well described but could be used to create value, SSL is for you.

How to use for insights? SSL is a great boon to the insights field for one particular reason: most real-world data is not well structured for use in AI applications. SSL algorithms create their own understanding of the data from the data itself. No human labeling needed, just data, which is great considering how much insights info is locked in proprietary systems or a million surveys with slightly different wording.

Reconciling surveys: Take 500 custom brand tracking studies and create a unified understanding of how brands grow without needing to completely standardize.

Semantic search: Improve insights data retrieval by using an SSL model which understands the context of the data and doesn’t need to match directly on keywords.

Segmentation on steroids: Create a new approach to customer segmentation which relies on an SSL model to figure out segments that fit together naturally through an understanding of the underlying data. Can be used to uncover new insights and findings.

Enhancing models: Take messy real-world data (e.g. thousands of focus groups, surveys, and transcripts) and use an SSL model to add the context (labels) needed to make that data work for other AI applications (e.g. any other model on this list).

Anomaly detection: SSL models learn the normal parameters of a dataset, so when things don’t fit those parameters you have an anomaly to investigate.

Accessibility:

Model Maturity: Maturing

Talent Pool: Moderate

Implementation Complexity: Somewhat difficult

Want to learn more? This video on Self Supervised Learning provides a high level overview.

Graph Neural Networks (GNNs)

Graph Neural Networks (GNNs) are a class of machine learning models designed to process and analyze data structured as complex relationship graphs (think network). These consist of nodes (representing entities, like people or molecules) and edges (representing relationships, like friendships or chemical bonds). GNNs excel at capturing complex relationships in graph-structured data, making them ideal for tasks like social network analysis, recommendation systems, molecular chemistry, and traffic prediction. GNNs are all about understanding connections, so they use that connectivity to learn.
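The core move in most GNNs is message passing: each node updates its own description by blending in a summary of its neighbors. Below is a minimal sketch of one round using mean aggregation and a ReLU. In a real GNN the blending weights are learned matrices and several rounds are stacked; here they are two fixed scalars (`w_self`, `w_neigh`), and the three-customer graph is hypothetical.

```python
def message_passing_layer(graph, features, w_self=1.0, w_neigh=1.0):
    """One round of mean-aggregation message passing.
    graph:    node -> list of neighbor nodes
    features: node -> list of floats describing that node
    Each node's new features = ReLU(w_self * own + w_neigh * mean of neighbors)."""
    updated = {}
    for node, neighbors in graph.items():
        own = features[node]
        if neighbors:
            # Average each feature across the node's neighbors (the "messages").
            mean = [sum(features[n][k] for n in neighbors) / len(neighbors)
                    for k in range(len(own))]
        else:
            mean = [0.0] * len(own)
        updated[node] = [max(0.0, w_self * o + w_neigh * m) for o, m in zip(own, mean)]
    return updated

# Hypothetical network: customer A is connected to B and C.
graph = {"A": ["B", "C"], "B": ["A"], "C": ["A"]}
features = {"A": [1.0, 0.0], "B": [0.0, 1.0], "C": [0.0, 3.0]}
print(message_passing_layer(graph, features))
```

After one round, A's description carries information about B and C, and theirs carries information about A. Stack a few rounds and each node "knows" about its wider neighborhood; stack too many and everyone starts to look the same, which is the over-smoothing issue noted below.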

Best examples: Drug discovery that is able to uncover new molecules to improve health. That friend algorithm in your social media app that does an uncanny job finding people you know. Google Maps' ability to predict the best route from origin to destination while taking into account traffic along the way.

What they do: Understand relationships and connections in data, like how people or things are linked.

Where they struggle: Graph Neural Networks struggle with scalability, as processing large graphs with millions of nodes is computationally expensive and often requires approximations. They also face issues when many layers are stacked, since node representations become too similar to tell apart (a problem known as over-smoothing), and with handling dynamic graphs that change over time.

The one sentence use case: You have data that's connected in some way and you want to leverage those connections to make predictions.

How to use for insights? GNNs are a cornerstone model for deriving insights from connected data. They enable you to move from analyzing isolated data points to understanding the rich relationships that often drive key outcomes. Through learning the structure of connections, a GNN can unlock a deeper, more contextual understanding of complex relationships. Much of the insights field revolves around understanding relationships between various data points so GNNs are a great application to be aware of.

Advanced segmentation: Identify groups of customers with similar behaviors, preferences, or social connections. This gives you a more nuanced segmentation than attribute-based methods. Use this to understand what defines a consumer base.

Insights generation: Create new insights based on hard-to-spot relationships between various cohorts of data. When paired with LLMs, this allows for reasoning that can substantially complement the typical data analyst.

Influence tracking: Deploy in marketing activation by using data across touchpoints to identify the influential customers or products within the network that drive trends or purchases.

Fraud networks: Fraudulent behavior often isn’t just one bad actor or respondent, it can be one person puppeting multiple accounts. Use GNN to identify the network of larger scale fraudulent behavior (e.g. botnets, etc.).

Criticality analysis: Understand the parts of the marketing or supply ecosystem that are critical to the whole network functioning. When combined with other approaches it can be used to scenario plan for changes in strategy or unforeseen circumstances.

Accessibility:

Model Maturity: Maturing

Talent Pool: Scarce

Implementation Complexity: Somewhat difficult

Want to learn more? This video is a good overview of GNNs with a particular focus on drug discovery.

Multimodal Models

When large language models were first created people loved them, but soon realized they wanted to interact with the models in the same way we interact with other people, not just through text communications but with photos, articles, music, spoken language, etc. From this demand came multimodal models. Multimodal models are advanced machine learning models designed to process, understand, and generate outputs from multiple types of data, such as text, images, audio, video, or even sensor data, within a single framework. Unlike traditional models that handle one modality (e.g., text-only language models or image-only convolutional neural networks), multimodal models integrate and reason across diverse data types, capturing richer context and enabling more human-like intelligence. These models are a cornerstone of modern AI, powering applications like virtual assistants, content generation, and autonomous systems.
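To give a feel for "reasoning across data types," here is a sketch of late fusion, one of the simplest ways to combine modalities: score each modality separately, then merge the scores. To be clear, this is not how models like Gemini work internally (they fuse modalities inside a single network); it's a baseline that shows why combining signals matters. The scores, weights, and modality names are hypothetical.

```python
def late_fusion(scores, weights):
    """Combine per-modality scores (e.g. sentiment from -1 to 1) into one weighted score.
    scores, weights: dicts keyed by modality name; modalities without a weight are skipped."""
    used = [m for m in scores if m in weights]
    total_weight = sum(weights[m] for m in used)
    if total_weight == 0:
        raise ValueError("no weighted modalities present")
    return sum(scores[m] * weights[m] for m in used) / total_weight

# Hypothetical sarcastic review: "Great, another price increase."
# The words alone read positive; the tone of voice and facial expression do not.
scores = {"text": 0.7, "audio": -0.8, "face": -0.6}
weights = {"text": 1.0, "audio": 1.0, "face": 1.0}
print(round(late_fusion(scores, weights), 3))
```

A text-only model would call this review positive; once tone and expression are in the mix, the combined read turns negative. True multimodal models go further by letting each modality inform how the others are interpreted, rather than just averaging at the end.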

Best examples: Google Gemini: take a photo and the model tells you what is in the image.

What they do: Mix different types of data (text, images, audio) and reason across them.

Where they struggle: Multimodal models struggle with high computational demands, requiring significant resources to process and align multiple data types simultaneously. They also face challenges with data quality and cross-modal interference, where noisy or misaligned datasets and modality imbalances can lead to suboptimal performance.

The one sentence use case: You want to build a solution that considers multiple forms of data and reasons how to make use of that data to deliver value.

How to use for insights? A natural evolution of the AI modeling space, multimodal models are relatively cutting edge and offer a lot of value to the insights community. Understanding consumers requires models that can not only read text but also interpret tonality in speech and facial expressions.

True sentiment analysis: Run complete sentiment analysis on large-scale consumer data and better interpret edge cases such as irony, sarcasm, puns, memes, and satire.

Data-contextualized ethnography: Analyze consumer videos/photos of a use case together with quantitative data from consumer studies. For example: people buy a lot of baking soda, and half the time it ends up in the fridge.

Next-level CX: Combine data from site analytics, call center audio, user-generated content, and online posts/reviews to track customer experience in real time.

Multimodal analysis: Analyze the performance of products/advertising/media using visuals, audio, and consumer reaction data to disentangle the elements that have the greatest impact.

Advertising creative generation: Create new video and audio advertising built on consumer data and historical brand assets.

Enhanced visual search: Create a more engaging search experience that goes beyond text to images. For example, find all advertising for “Coke” regardless of whether Coke is mentioned in the ad copy or just shown in an image or video.

Accessibility:

Model Maturity: Maturing

Talent Pool: Moderate

Implementation Complexity: Very difficult

Want to learn more? Most of us have seen multimodal AI in action, but here’s a more nuanced overview of what makes AI multimodal.

Neural Radiance Fields (NeRF)

One of the coolest and newest arrivals on the scene, Neural Radiance Fields (NeRFs) are a cutting-edge class of machine learning models designed to reconstruct and render highly detailed 3D scenes from a collection of 2D images. When you look out your window, you can imagine what the other side of the tree you’re looking at looks like; with NeRF and some training data, so can computers. NeRFs model the geometry and appearance of a scene by learning how light interacts with objects in 3D space.

Best examples: Rendering 3D versions of spaces from photos, computer gaming, image-to-video.

What they do: Turn 2D images into 3D scenes.
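The "learning how light interacts with objects" part comes down to volume rendering: a neural network predicts a density and a color at points along each camera ray, and those samples are composited into a single pixel color. The sketch below shows only that compositing step, using the standard NeRF formula, with hand-picked sample values standing in for the network’s outputs. The `render_ray` helper and the sample values are hypothetical, and training the network itself is out of scope.

```python
import math
from typing import List, Tuple

Color = Tuple[float, float, float]

def render_ray(densities: List[float], colors: List[Color], deltas: List[float]) -> Color:
    """Composite samples along one camera ray into a single pixel color.

    densities: sigma_i, how much "stuff" is at each sample point (normally predicted by the network)
    colors:    c_i, RGB color emitted at each sample point (normally predicted by the network)
    deltas:    delta_i, distance between consecutive sample points
    """
    transmittance = 1.0  # T_i: fraction of light that reaches sample i unblocked
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # chance the ray is absorbed at this sample
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha  # light left after passing this sample
    return (pixel[0], pixel[1], pixel[2])

# Hypothetical ray: empty air, then a dense green leaf, then a brown trunk behind it.
densities = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 0.0), (0.1, 0.8, 0.1), (0.6, 0.2, 0.1)]
deltas = [0.1, 0.1, 0.1]
print(render_ray(densities, colors, deltas))  # mostly green: the leaf hides the trunk
```

Because every step is differentiable, a real NeRF can compare rendered pixels with the training photos and adjust the network’s densities and colors until the renders match, which is also why it needs many images and a lot of compute.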

Where they struggle: NeRF models struggle with high computational costs and slow rendering, requiring powerful hardware and limiting real-time applications. They also need many high-quality images and falter with moving objects or changing lighting, lacking flexibility for dynamic or sparse-data scenarios.

The one sentence use case: You have a lot of images and need to render them into interactive (virtual) content or videos.

How to use for insights? NeRF models are likely the least directly applicable to the insights field. Currently, they are the darling of the game/video production and VR fields, but over time we’ll see more applications for the insights industry. For now, there are only a couple of key applications.

Rendering products: Take 2D product images and create 3D models that let consumers interact with products more immersively.

VR/AR research: Create 3D environments that customers can explore immersively in VR or AR, enabling virtual store testing, shelf layout analysis, etc.

Immersive placement testing: Create 3D versions of products to place dynamically into video content to test novel placement options. For example: place a soda can virtually into 50 different video podcasts to test which generates the best consumer response.

Accessibility:

Model Maturity: Relatively New

Talent Pool: Scarce

Implementation Complexity: Very difficult

Want to learn more? NeRF is one of the most visually interesting models. Check out this quick 2-minute overview of the tech.

Coming Soon: What’s Next In AI Models

It’s hard to say what the next big thing in AI will be, because the large AI innovators love to surprise us with mind-blowing new models that have been hidden in the lab for a while (looking at you, Veo 3). However, if we look at the insights industry and what’s starting to scale there, a few key technologies are worth keeping an eye on:

Web-Scale Retrieval-Augmented Generation (RAG)

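When you go down the rabbit hole on AI systems, you quickly learn about the context window: the limited amount of text a model can consider at once. RAG works around that limit by retrieving only the most relevant documents and feeding them to the model alongside your question. So while RAG has been around for a while, web-scale RAG is the promised land. It’s like chatting with an AI that’s allowed to read the entire internet while it talks to you, and can remember everything it just read, even if it’s super long.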

Analogy: Imagine a librarian who can instantly scan every book, website, and document in the world, find just the right paragraphs, and hand them to a super-intelligent editor who crafts a perfect response on the fly.
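The librarian half of that analogy is the retrieval step. Here is a toy sketch that scores a handful of documents against a question using simple word overlap (bag-of-words cosine similarity) and builds a prompt from the best matches. The documents and the `retrieve` and `build_prompt` helpers are hypothetical; real RAG systems, web-scale or not, typically use learned embeddings and search indexes instead, and the final call to a language model (the editor) is left out.

```python
import math
import re
from collections import Counter
from typing import List

def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the question (the librarian)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question: str, passages: List[str]) -> str:
    """Place only the retrieved passages in the model's context window, plus the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"

# Hypothetical document collection.
documents = [
    "Oat milk sales rose sharply among younger shoppers this quarter.",
    "The new store layout moved snacks closer to the checkout.",
    "Survey respondents said oat milk tastes better in coffee.",
]
question = "Why are younger shoppers buying oat milk?"
top = retrieve(question, documents, k=2)
print(build_prompt(question, top))
```

The design point is that the model never needs to hold the whole collection in its context window; only the few passages judged relevant are passed along, which is what lets the approach scale toward the size of the web.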

Why it matters: Most AI tools still rely on what they memorized during pre-training. Web-scale RAG lets models pull in fresh, relevant knowledge in real time. This is the future of on-demand intelligence: think AI analysts that monitor markets, media, or consumers as things happen and generate insight reports instantly.

Where it’s happening: You.com, Perplexity, OpenAI’s GPT-4 Turbo with long context, and efforts from Anthropic and Meta are racing to push the boundaries of large-scale retrieval and generation. Academic work is coming out of Stanford, the Allen Institute for AI, and the University of Washington.

Neurosymbolic AI

The challenge with a lot of AI today is that the models provide great answers but don’t always tell you how they got there. Neurosymbolic AI mixes brain-like learning (neural networks) with rule-following logic (symbols). It can both learn from data and follow explicit rules, like doing your math homework and explaining each step.

Analogy: Imagine a detective team where one partner is a brilliant gut-instinct type who notices patterns others miss (the neural network), and the other is a strict rule-follower who builds logical timelines and checks alibis (the symbolic logic engine). Separately, they’re good. Together, they solve cases with both creativity and clarity.
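A toy way to picture the detective team in an insights setting is screening survey responses: a learned score proposes a verdict, and explicit rules check it and record why. In the sketch below, the "neural" partner is faked with a fixed logistic scoring function (a real system would use a trained network), and the rules, field names, and thresholds are all hypothetical. Actual neurosymbolic systems integrate the two halves in far more sophisticated ways; this only shows why the combination yields a traceable explanation.

```python
import math
from dataclasses import dataclass, field
from typing import List

@dataclass
class Response:
    seconds_to_complete: float
    answers: List[int]  # e.g. 1-5 ratings
    open_text: str

@dataclass
class Verdict:
    keep: bool
    reasons: List[str] = field(default_factory=list)

def neural_score(r: Response) -> float:
    """Stand-in for a trained network (the gut-instinct partner): P(low-quality response).
    The weights are made up for illustration."""
    z = 2.0 - 0.05 * r.seconds_to_complete + (1.5 if len(r.open_text.split()) < 3 else -1.0)
    return 1.0 / (1.0 + math.exp(-z))

def symbolic_check(r: Response) -> List[str]:
    """Explicit, human-readable rules (the rule-follower); thresholds are hypothetical."""
    violations = []
    if r.seconds_to_complete < 60:
        violations.append("completed in under 60 seconds")
    if len(set(r.answers)) == 1:
        violations.append("gave the same rating to every question (straightlining)")
    return violations

def judge(r: Response) -> Verdict:
    p = neural_score(r)
    violations = symbolic_check(r)
    reasons = [f"pattern model estimates a {p:.0%} chance of low quality"] + violations
    # Keep the response only if the learned score and the rules both say it looks fine.
    keep = p < 0.5 and not violations
    return Verdict(keep, reasons)

print(judge(Response(45, [3, 3, 3, 3], "ok")))
print(judge(Response(300, [4, 2, 5, 3], "I mostly buy it for baking, not cleaning.")))
```

The `reasons` list is the point: every decision comes with a step-by-step trail a researcher can audit, rather than a bare score.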

Why it matters: Insights teams want both smart automation and traceable logic. Neurosymbolic AI offers more transparency, which is key for generating explainable results in regulated or high-stakes research contexts. This will be critical for automating insights generation.

Where it’s happening: MIT-IBM Watson AI Lab, Stanford's Human-Centered AI Institute, and DARPA's Explainable AI program are major players here.

Meta-Learning / Few-Shot Learning

Looking through the examples above, you’ll quickly find that the big hurdle for implementation is almost always the training or tuning of a model. Most AI needs thousands of examples to learn. Meta-learning teaches AI how to learn quickly: show it just a few examples, and it gets the idea. Learning faster will open the door for companies with smaller datasets or only partially labeled data to take advantage of AI.

Analogy: It’s like watching someone tie a shoelace once, and then figuring out how to do it yourself.
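One well-known few-shot technique, in the spirit of prototypical networks, averages the handful of labeled examples for each class into a "prototype" and labels new items by whichever prototype is closest. The sketch below does exactly that on made-up two-dimensional feature vectors. The segment names and features are hypothetical, and in a real meta-learning system the features would come from an encoder trained across many earlier tasks, which is the part that actually "learns how to learn."

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]

def prototypes(support: Dict[str, List[Vector]]) -> Dict[str, List[float]]:
    """Average the few labeled examples per class into one prototype vector."""
    protos = {}
    for label, examples in support.items():
        dims = len(examples[0])
        protos[label] = [sum(e[d] for e in examples) / len(examples) for d in range(dims)]
    return protos

def classify(x: Vector, protos: Dict[str, List[float]]) -> str:
    """Assign x to the class whose prototype is nearest (Euclidean distance)."""
    return min(protos, key=lambda label: math.dist(x, protos[label]))

# Hypothetical features (price sensitivity, eco-interest) for a brand-new
# customer segment, with only three labeled examples per class.
support = {
    "eco_enthusiast": [[0.2, 0.9], [0.3, 0.8], [0.1, 0.95]],
    "bargain_hunter": [[0.9, 0.1], [0.8, 0.2], [0.95, 0.15]],
}
protos = prototypes(support)
print(classify([0.25, 0.85], protos))  # prints "eco_enthusiast"
```

Adding a new class only requires a few labeled examples to form its prototype; no retraining is needed, which is what makes the approach attractive for low-data segments.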

Why it matters: This is game-changing for niche or low-data segments such as a new product line, an emerging customer trend, or a small panel. It lets you deploy AI where you don’t have mountains of data.

Where it’s happening: Research is booming at Google DeepMind (formed in 2023 by merging Google Brain and DeepMind), OpenAI, and the University of Toronto.

Federated Learning at Scale

These days, privacy is part of the public consciousness. IAPP estimates suggest that 82% of the global population is covered by some form of privacy regulation. If AI models get more accurate with more data, how can you train them in a privacy-centered world? That’s where federated learning comes in. Instead of sending your data to one big server, the AI learns right on your device and shares just what it learned, not your personal info. It’s smart and private at the same time.

Analogy: Picture a bunch of students studying for the same test, but they’re not allowed to share their notes. Instead, each one studies on their own, figures out what tricks work (like “draw a diagram for this part” or “use a rhyme for that list”), and sends just those tips to a group chat. Everyone gets smarter together without ever sharing their private notebooks.
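The group-chat analogy maps closely onto Federated Averaging (FedAvg), a widely used federated learning algorithm: each device trains a copy of the model on its own data, sends back only the updated model weights, and the server averages them, weighting each device by how much data it has. Below is a minimal, hypothetical sketch for a one-feature linear model with made-up device data; real deployments add secure aggregation, client sampling, and often differential privacy.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs that never leave the device

def local_train(w: float, b: float, data: Data, lr: float = 0.05, epochs: int = 20) -> Tuple[float, float]:
    """Gradient descent on mean squared error, run on the device itself."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def federated_round(w: float, b: float, devices: List[Data]) -> Tuple[float, float]:
    """One FedAvg round: devices train locally; the server sees only weights, averaged by data size."""
    updates = [(local_train(w, b, data), len(data)) for data in devices]
    total = sum(n for _, n in updates)
    new_w = sum(wi * n for (wi, _), n in updates) / total
    new_b = sum(bi * n for (_, bi), n in updates) / total
    return new_w, new_b

# Hypothetical private data on three phones, all following y = 2x + 1.
devices = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (3.0, 7.0), (1.5, 4.0)],
    [(0.5, 2.0), (2.5, 6.0)],
]
w, b = 0.0, 0.0
for _ in range(50):
    w, b = federated_round(w, b, devices)
print(round(w, 2), round(b, 2))  # should approach 2 and 1
```

Only the two numbers `w` and `b` ever travel to the server, mirroring the "tips, not notebooks" idea: the shared model learns the common pattern without any device revealing its raw data points.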

Why it matters: The insights field today is already working to manage privacy barriers. For customer research and behavioral data, federated learning lets you tap into edge data (like phones or in-app surveys) without violating privacy. It supports compliance while expanding your reach.

Where it’s happening: Google (for Android), Apple (on-device AI), and Carnegie Mellon University are pioneering large-scale federated systems.

Intent-Based Modeling

Insights are best consumed when they feed into the decision-making engine of a business. Historically, this has been difficult because insights practitioners often focus only on the facts of the data and try not to overinterpret findings. Intent-based modeling opens new doors by inferring what someone is really trying to do, even if their actions are messy. It’s less about “what they clicked” and more about “what they wanted.”

Analogy: Imagine getting in your car at 8:00 a.m. on a Tuesday. You don’t type anything in, but your GPS already suggests the fastest route to your office. Why? Because based on your past patterns, it knows where you’re probably headed even if you haven’t told it. That’s intent-based modeling: predicting your goal from your behavior, not just waiting for your interaction.
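The GPS example can be sketched as a simple frequency model: count where the driver actually went in each (weekday, hour) context and predict the most common destination, falling back to the overall favorite when a context is new. This is a deliberately tiny, hypothetical illustration (the `IntentPredictor` class and the trip data are invented); production intent models typically use much richer behavioral signals and machine-learned classifiers.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

Context = Tuple[str, int]  # (weekday, hour of day)

class IntentPredictor:
    """Predicts a likely destination from past (context, destination) trips."""

    def __init__(self) -> None:
        self.by_context: Dict[Context, Counter] = defaultdict(Counter)
        self.overall: Counter = Counter()

    def observe(self, weekday: str, hour: int, destination: str) -> None:
        """Record one completed trip."""
        self.by_context[(weekday, hour)][destination] += 1
        self.overall[destination] += 1

    def predict(self, weekday: str, hour: int) -> Optional[str]:
        """Most frequent destination for this context, else the overall favorite."""
        counts = self.by_context.get((weekday, hour)) or self.overall
        if not counts:
            return None  # no history at all yet
        return counts.most_common(1)[0][0]

# Hypothetical trip history.
trips: List[Tuple[str, int, str]] = [
    ("Tue", 8, "office"), ("Tue", 8, "office"), ("Tue", 8, "gym"),
    ("Sat", 10, "grocery store"), ("Sat", 10, "grocery store"),
]
model = IntentPredictor()
for day, hour, dest in trips:
    model.observe(day, hour, dest)
print(model.predict("Tue", 8))  # prints "office"
print(model.predict("Sun", 20))  # unseen context: falls back to the overall favorite
```

Note that the prediction is made before the user types anything: the input is context and past behavior, not an explicit request, which is the shift from "what they clicked" to "what they wanted."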

Why it matters: This is gold for customer journey analysis, churn prediction, and targeting. It helps brands serve real needs instead of just reacting to facts.

Where it’s happening: Adobe Research, Stanford HCI, and Meta’s behavioral science labs are pushing this work, often tied to personalization and UX modeling.